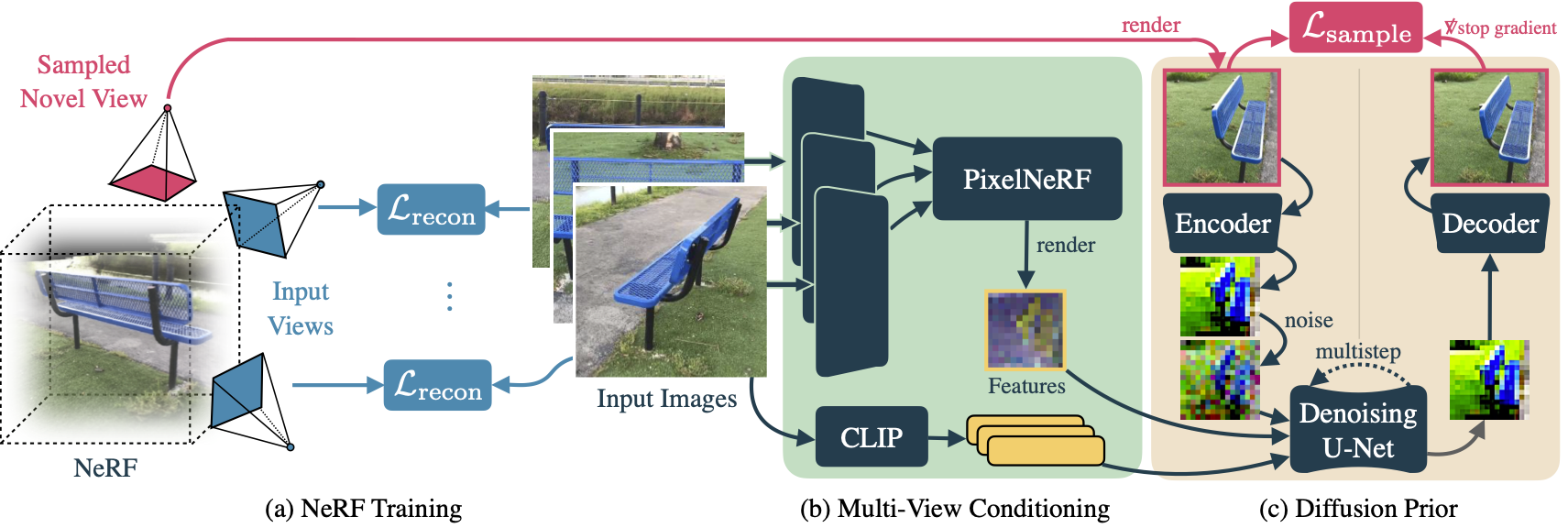

Experience photorealistic 3D scene reconstruction like never before with ReconFusion. Building on Neural Radiance Fields (NeRFs), famed for their ability to render lifelike views of intricate scenes, ReconFusion significantly shortens the lengthy capture process they usually require. Using just a few snapshots, it adds a diffusion prior to the NeRF-based 3D reconstruction pipeline, producing realistic geometry and texture, even in areas the input photos barely cover, while preserving the look of observed regions.

The diffusion prior is trained for novel view synthesis on synthetic and multiview datasets, and it guides the reconstruction at novel camera poses beyond those of the input images. We’ve put ReconFusion to the test on a range of real-world datasets, including forward-facing and 360-degree scenes. The results? A significant performance leap over previous few-view NeRF reconstruction methods. With ReconFusion, enjoy more precision and less hassle with every 3D reconstruction.
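For the curious, here is a rough sketch of how a diffusion prior might be folded into a few-view reconstruction objective. It is an illustration under stated assumptions, not ReconFusion's actual implementation: the function names, the flat pixel lists, the plain mean-squared-error terms, and the `prior_weight` value are all hypothetical. The idea it shows is simply that renders at captured poses are compared with the real photos, while renders at novel poses are nudged toward images suggested by the prior.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally sized flat pixel lists."""
    if len(a) != len(b):
        raise ValueError("pixel lists must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def few_view_loss(
    rendered_observed: Sequence[float],  # render at a captured camera pose
    captured: Sequence[float],           # the real photo at that pose
    rendered_novel: Sequence[float],     # render at a pose with no photo
    prior_sample: Sequence[float],       # image proposed by the prior (assumed given)
    prior_weight: float = 0.1,           # hypothetical balancing weight
) -> float:
    """Reconstruction term plus a weighted prior term (illustrative only)."""
    reconstruction = mse(rendered_observed, captured)
    prior = mse(rendered_novel, prior_sample)
    return reconstruction + prior_weight * prior


# Observed view already matches its photo; the novel view disagrees with the prior.
loss = few_view_loss([1.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0], prior_weight=0.5)
print(loss)
```

In this toy setup the first term keeps observed regions faithful to the photos, and the second term is what would fill in plausible content where no photo exists. A real pipeline would use image tensors and likely richer losses than plain MSE.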

ReconFusion distills a consistent 3D model from inconsistent samples

ReconFusion outperforms other few-view NeRF methods
